THINGS THAT THINK.

Our products

DAVINSY

Data-driven AIoT control system

DAVINSY VOICE

Voice-Sound AIoT control system

DAVINSY MAESTRO

Development environment for DavinSy-based AIoT systems

Deeplomath Augmented Learning Engine

DALE is proprietary and does not require third-party libraries. It offers a simple API. The dataset is built during operation, from real live data. DALE is fully generic: it is independent of both the problem to solve (regression or classification) and the application domain. The algorithm is certifiable and deterministic, with no stochastic component. DALE builds the neural network in a single pass and does not require hyper-parameter tuning. The memory footprint of the code has been reduced to a two-digit number of kilobytes (under 100 KB).

When using DALE, the user only needs to specify the type of problem to solve (classification or regression), the accuracy vs. memory tradeoff, and the rejection vs. acceptance threshold for open-set situations.
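The three user-facing choices can be pictured as a small settings object. The sketch below is illustrative only: `ProblemType`, `EngineSettings`, the field names and the 0 to 1 scales are assumptions made for this example, not the actual DALE API.

```python
from dataclasses import dataclass
from enum import Enum


class ProblemType(Enum):
    CLASSIFICATION = "classification"
    REGRESSION = "regression"


@dataclass(frozen=True)
class EngineSettings:
    """Hypothetical container for the three choices left to the user."""
    problem_type: ProblemType
    accuracy_vs_memory: float   # assumed scale: 0.0 = smallest model, 1.0 = most accurate
    rejection_threshold: float  # assumed scale: minimum confidence (0..1) to accept a result

    def __post_init__(self):
        # Validate the assumed 0..1 ranges at construction time.
        if not 0.0 <= self.accuracy_vs_memory <= 1.0:
            raise ValueError("accuracy_vs_memory must be in [0, 1]")
        if not 0.0 <= self.rejection_threshold <= 1.0:
            raise ValueError("rejection_threshold must be in [0, 1]")


settings = EngineSettings(
    problem_type=ProblemType.CLASSIFICATION,
    accuracy_vs_memory=0.5,
    rejection_threshold=0.8,
)
```

Everything else (network topology, node parameters, stopping criterion) would be left to the engine, which is the point of the paragraph above.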

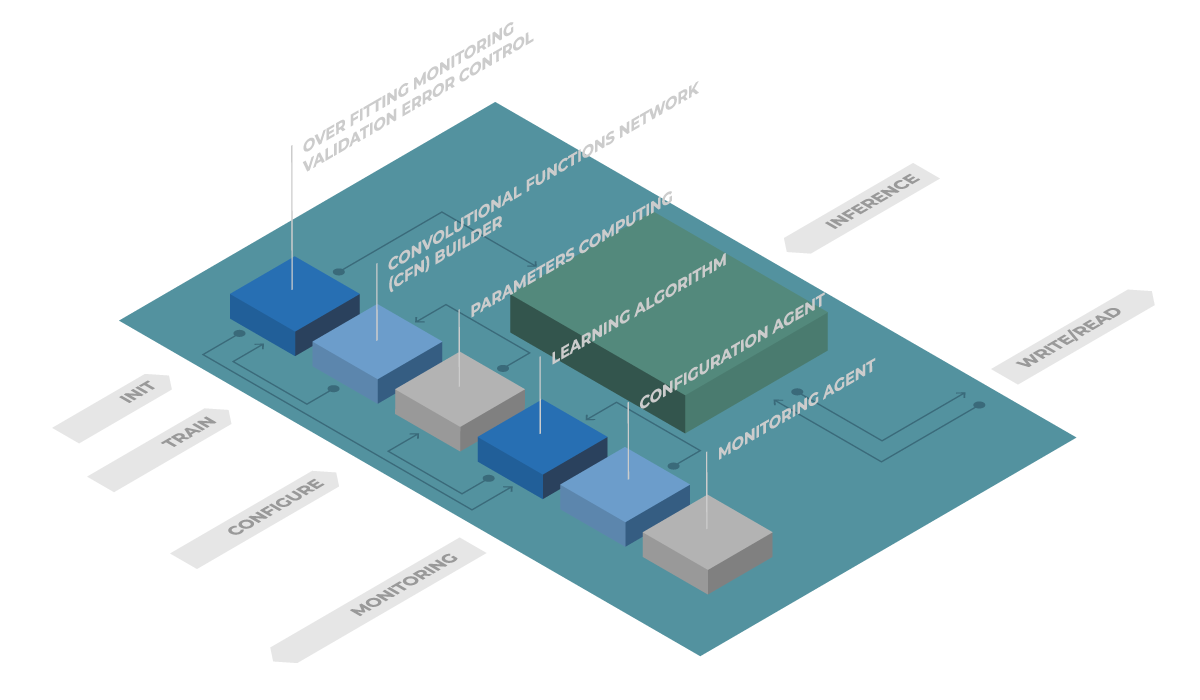

The main functions of Deeplomath Augmented Learning Engine are:

- Learning Algorithm: orchestrates model building without any user intervention.

- Parameters Computing: computes the parameters for each functional node of the network.

- Convolutional Functions Network Builder: stacks the layers.

- Overfitting Monitoring: tracks validation and learning errors, and stops training to avoid overfitting.

- Inference: processes data using existing models, for closed-set or open-set problems (with rejection).

- Monitor Agent: computes KPIs and profiles the input database.
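To make the open-set "rejection" idea in the Inference item concrete, here is a minimal sketch of threshold-based rejection. It is a generic illustration, not DALE's algorithm: the function name `infer_open_set`, the score dictionary and the threshold semantics are all assumptions.

```python
from typing import Optional


def infer_open_set(scores: dict[str, float], rejection_threshold: float) -> Optional[str]:
    """Return the best-scoring known label, or None when the input is rejected.

    `scores` maps each known class to a confidence value (hypothetical format).
    """
    if not scores:
        return None
    label, best = max(scores.items(), key=lambda item: item[1])
    # Closed-set inference would always return `label`;
    # open-set inference rejects inputs the model is not confident about.
    return label if best >= rejection_threshold else None


accepted = infer_open_set({"alice": 0.91, "bob": 0.06}, rejection_threshold=0.8)
rejected = infer_open_set({"alice": 0.45, "bob": 0.40}, rejection_threshold=0.8)
```

With these inputs, `accepted` is `"alice"` and `rejected` is `None`, because no known class reaches the threshold in the second call.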

In industrial or professional use cases, data scientists struggle to build a large data set that represents the reality of use. The difficulty of capturing and collecting real, high-quality live data is a major barrier to deploying AI.

In a conventional workflow, a successful training phase requires huge resources (large processing power and huge data storage). It includes iterative, time-consuming hyper-parameter optimization by trial and error. The resulting models are heavy and cannot be embedded without pruning and quantization.

By comparison, thanks to its new mathematical foundations, Deeplomath’s workflow is up to 100,000 times faster than classical iterative, semi-manual workflows. DALE was created to make learning possible on scarce datasets (tens of records rather than millions), which matches the nature of real live data. Finally, DALE can build tiny models just-in-time on low-power processors, or even microcontrollers, without any hardware AI accelerator.

Because of its frugality, Deeplomath can run on very constrained devices at the very edge, as close as possible to where sensors capture real live data. It learns fast enough to enable continuous learning and just-in-time adaptation of models. This allows a new workflow and resolves a paradox: static models only meet live data once they are deployed, after pruning and quantization, and therefore cannot learn from it.

Moreover, by allowing processing of complex data on the edge, Deeplomath Augmented Learning Engine solves several problems inherent to IoT:

- It protects user privacy by avoiding sending data to the cloud.

- It increases product reliability by removing the dependency on a network connection.

- It improves product reactivity by processing data just-in-time.

- It decreases power usage by avoiding unnecessary communications.

- It lowers the bill of materials, because it runs on standard hardware (no need for hardware accelerators).

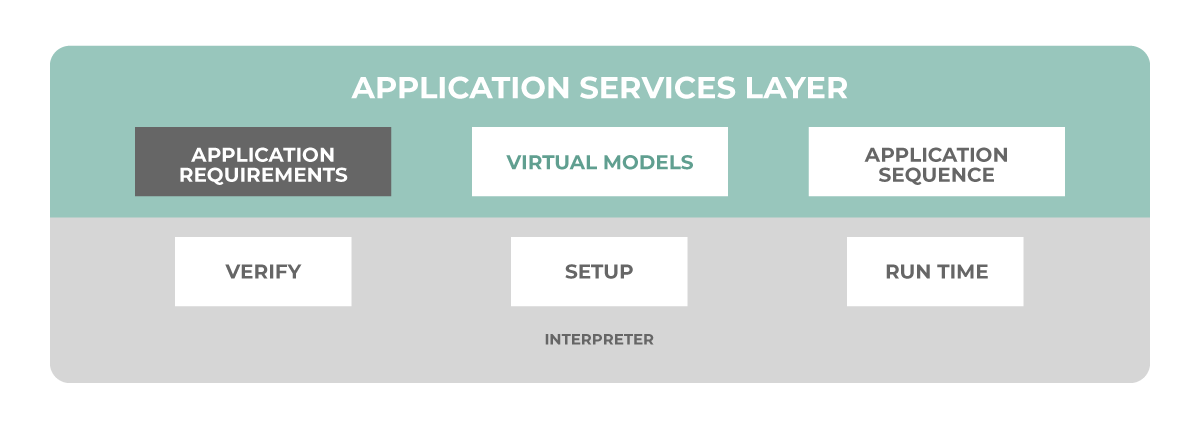

Davinsy Application Services Layer

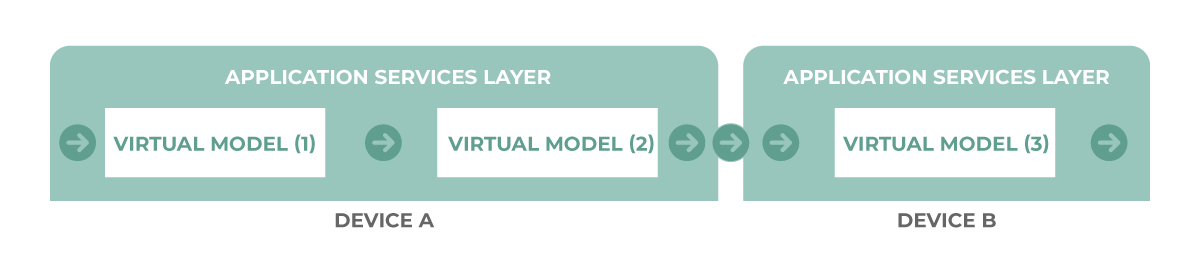

Davinsy ASL offers a high-level software interface to describe how problems are solved and in what conditional sequence. Complex problems are divided into smaller, dedicated ones. For example, multi-label problems (such as identifying both the speaker and the intent) generate large networks that are difficult to tune. Splitting them into two networks is more efficient in terms of resource allocation, performance and explainability. Each of these problems is described by a set of preprocessing and DALE configuration parameters. The Virtual Model is a new concept in the Davinsy system: it overrides the behaviour of the DALE engine to achieve polymorphism in regenerated models. Thanks to DALE's genericity, ASL can operate in multi-modal mode in the target application by chaining multiple Virtual Models.

To define how those models connect, we introduce “Application Sequences”, which are executed by the Application Services Layer. An Application Sequence describes how the data flows through the models, choosing the model to execute depending on the result of the previous one.

All services are configured using high-level parameters reflecting your application constraints, as shown in the following figure:

There are three major phases in the application life cycle after the download of the application package:

- Verification phase: at installation time, Davinsy ASL checks compatibility with the device resources.

- Setup phase: at installation time, after verification, Davinsy ASL stores the virtual models and allocates the minimum space in the database and memory.

- Run-time phase: Davinsy ASL receives internal events (new inference result …) and external events (new data from sensor …) and launches the appropriate tasks (store, preprocess, train, infer, postprocess …) as defined in the Application Sequence.

Streamlined by Davinsy Maestro, Davinsy ASL can interpret the complete description of the application flow within one environment.

For example, as shown in the following figure, a secured voice control application uses three Virtual Models: Voice Activity Detection, Speaker Identification and Command Identification.

The application only infers on the Speaker Identification network when voice is detected; otherwise the system stays idle. As a consequence, the power budget is optimized.

When an authorized user is identified, the application infers using that user's optimized and personalized network to determine the command.
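The conditional chaining described in this example can be sketched as follows. The function `run_sequence`, the frame dictionaries and the three stand-in models are assumptions made for illustration; real Virtual Models would be networks built by DALE, not lambdas.

```python
from typing import Callable, Optional

# A stand-in model takes an audio frame and returns a label, or None when rejected.
Model = Callable[[dict], Optional[str]]


def run_sequence(
    frame: dict,
    vad: Model,
    speaker_id: Model,
    command_models: dict[str, Model],
) -> Optional[tuple[str, str]]:
    """Chain the three models, running each one only if the previous result allows it."""
    if vad(frame) != "voice":
        return None  # no voice: stay idle, later models are never executed
    speaker = speaker_id(frame)
    if speaker is None or speaker not in command_models:
        return None  # unknown or unauthorized speaker: rejected
    command = command_models[speaker](frame)  # per-user personalized model
    return (speaker, command) if command is not None else None


# Toy stand-ins that read precomputed fields from the frame (hypothetical).
vad = lambda f: "voice" if f["energy"] > 0.1 else "silence"
speaker_id = lambda f: f.get("speaker")
command_models = {"alice": lambda f: f.get("command")}

idle = run_sequence({"energy": 0.0}, vad, speaker_id, command_models)
ok = run_sequence(
    {"energy": 0.9, "speaker": "alice", "command": "lights_on"},
    vad, speaker_id, command_models,
)
```

Here `idle` is `None` because no voice is detected, and `ok` is `("alice", "lights_on")`. The early returns are what keeps the heavier models from running on silence or on unauthorized speakers.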

The Application Services Layer is designed to support multi-device architectures (on the same board or over a network).

The ASL interprets the application sequence and immediately calls the inter-device communication module to transmit the event.

The complete application description (Application Sequence, all associated Virtual Models and resource requirements) is fully device-agnostic.