DavinSy

DAVINSY DATA-DRIVEN AIoT CONTROL SYSTEM

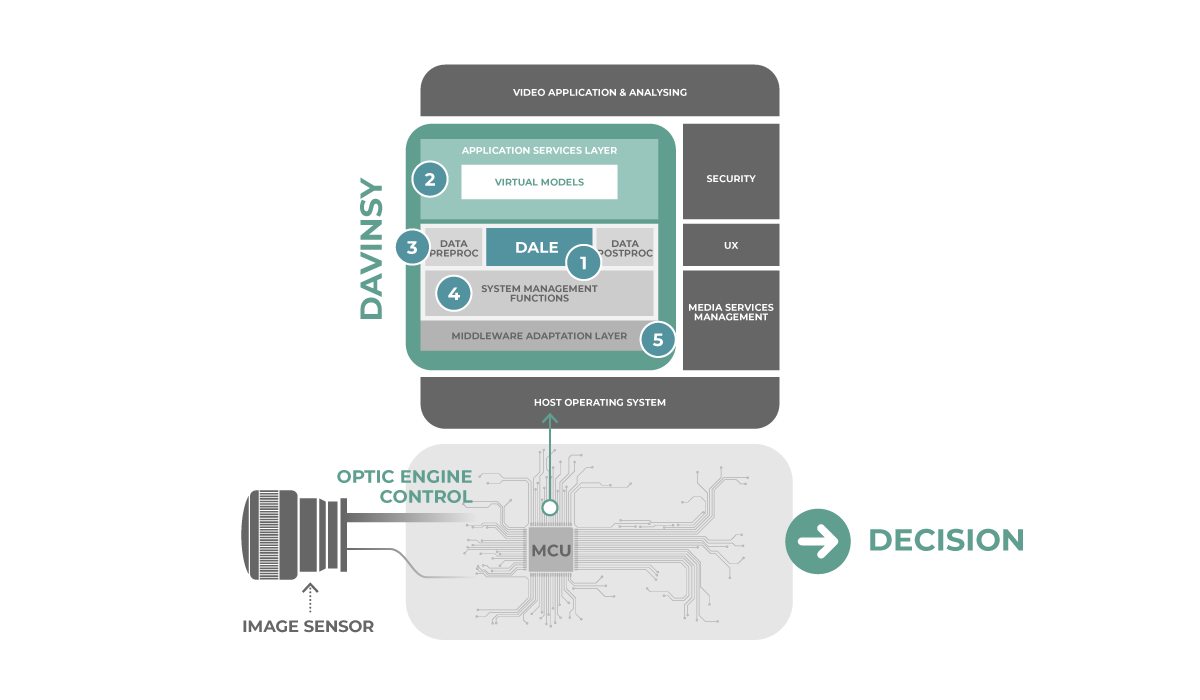

Bondzai’s DavinSy is an embedded software system that accelerates the integration of complete deep-learning AI workflows into industrial embedded systems.

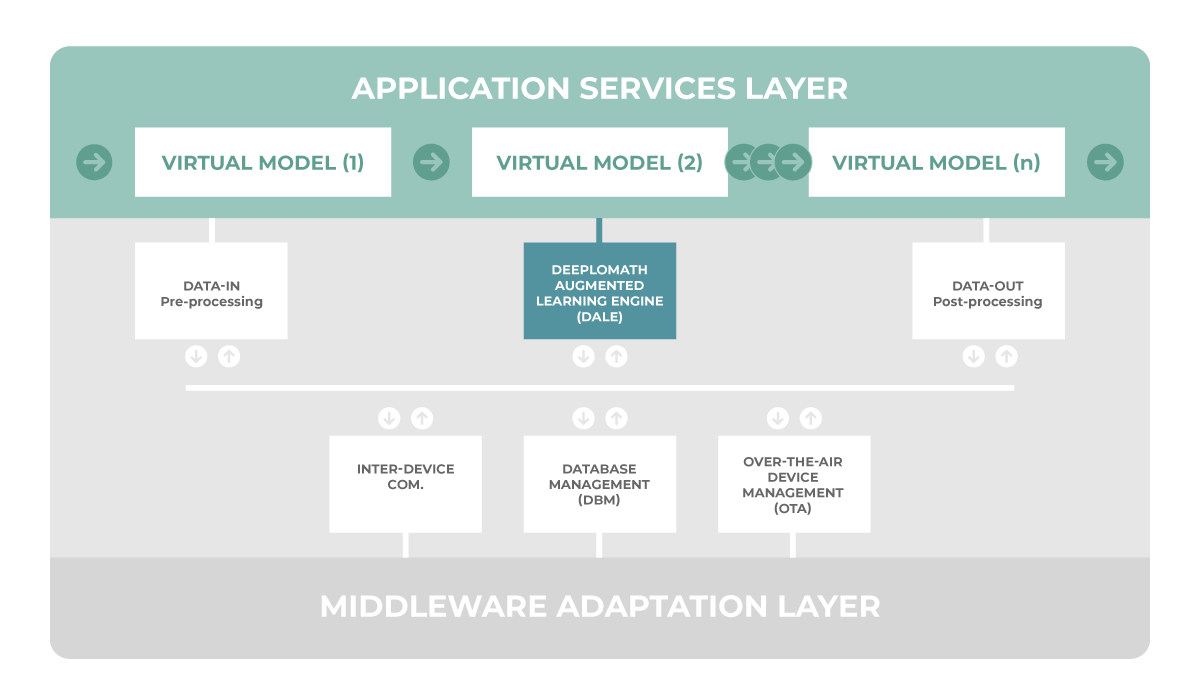

DavinSy continuously learns from real live data to solve the toughest problem of today’s classic static AI models: data drift over time. To address it, DavinSy adapts its models just in time to follow the variability of live data. DavinSy relies on Virtual Models, a new programming concept introduced in the Application Services Layer (ASL). Virtual Models drive DALE (Deeplomath Augmented Learning Engine), DavinSy’s internal deep AI engine. DavinSy achieves polymorphism through locally built models.

If the data structure changes and the models must adapt to a new situation, they are rebuilt automatically by learning directly from the structure extracted by DavinSy’s data pre-processing functions.

DavinSy is cross-platform software that interfaces with most embedded environments. It is built around a few major functional blocks and an innovative AI engine called DALE.

DavinSy Voice illustrates DavinSy operating in a real implementation for voice-control applications.

Deeplomath Augmented Learning Engine (DALE)


The engine is proprietary and does not depend on any third-party library. It offers a simple API with only a few parameters to configure. The dataset is built from real live data during operation. DALE is fully generic: it is independent of the problem to solve (regression or classification) and of the application domain. The algorithm is certifiable and deterministic, with no stochastic component. For each dataset, it constructs the associated deep neural network in a single pass, with no hyper-parameters to tune. The code has a memory footprint of less than 16 kB.

DALE’s parameters are limited to the type of problem to solve, the accuracy vs. memory trade-off, and the rejection vs. acceptance threshold for open-set problems.
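To make these parameters concrete, here is a minimal Python sketch of how an open-set rejection threshold is typically applied to a classifier's scores. DALE's actual API is not described on this page, so `EngineConfig`, `ProblemType`, `open_set_decision` and the 0-to-1 scales are hypothetical; only the idea of accepting the best class when its score clears a threshold comes from the text.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional, Sequence


class ProblemType(Enum):
    CLASSIFICATION = "classification"
    REGRESSION = "regression"


@dataclass(frozen=True)
class EngineConfig:
    """Hypothetical container for the three DALE parameters (names are illustrative)."""
    problem_type: ProblemType
    accuracy_vs_memory: float   # assumed scale: 0.0 = smallest model, 1.0 = most accurate
    rejection_threshold: float  # open-set only: minimum score required to accept a class

    def __post_init__(self) -> None:
        if not 0.0 <= self.accuracy_vs_memory <= 1.0:
            raise ValueError("accuracy_vs_memory must be in [0, 1]")


def open_set_decision(scores: Sequence[float], labels: Sequence[str],
                      config: EngineConfig) -> Optional[str]:
    """Return the best-scoring label, or None ("unknown") if it falls below the threshold."""
    if config.problem_type is not ProblemType.CLASSIFICATION:
        raise ValueError("open-set rejection only applies to classification")
    if not scores or len(scores) != len(labels):
        raise ValueError("scores and labels must be non-empty and the same length")
    best = max(range(len(scores)), key=lambda i: scores[i])
    if scores[best] < config.rejection_threshold:
        return None  # reject: the input matches no known class closely enough
    return labels[best]


# Usage: two enrolled speakers, hypothetical threshold of 0.7
config = EngineConfig(ProblemType.CLASSIFICATION, accuracy_vs_memory=0.5,
                      rejection_threshold=0.7)
print(open_set_decision([0.1, 0.9], ["alice", "bob"], config))  # bob
print(open_set_decision([0.4, 0.5], ["alice", "bob"], config))  # None
```

Raising `rejection_threshold` makes the system reject more inputs as unknown; lowering it accepts more, at the risk of attributing an unknown input to a known class.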

Application Services Layer (ASL)


ASL offers a high-level software interface to describe how problems are solved and in which conditional sequence. Complex problems are split into simpler ones. For example, with static AI models, multi-label problems (e.g., identifying both the speaker and the intent) require large networks that are difficult to tune. Splitting the problem into two networks is more efficient in terms of resource allocation, performance and explainability. Each of these sub-problems is described by its pre-processing and DALE configuration parameters. The Virtual Model is a new concept in DavinSy that overrides the behaviour of the DALE engine to achieve polymorphism in regenerated models. Thanks to DALE’s genericity, ASL can operate in multi-modal mode, chaining multiple Virtual Models in the target application.
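Splitting a multi-label problem also shrinks the label space: a joint model must distinguish every speaker/intent pair (N × M classes), whereas two chained models handle N + M. The Python sketch below assumes each sub-problem is a callable that returns a label, or `None` on rejection, and runs them as a conditional sequence. `Step`, `run_sequence` and the toy models are hypothetical and do not represent the ASL interface.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Sequence

Features = Sequence[float]
Model = Callable[[Features], Optional[str]]  # returns a label, or None when rejected


@dataclass
class Step:
    """One single-label sub-problem in the sequence (hypothetical structure)."""
    name: str
    model: Model


def run_sequence(steps: List[Step], features: Features) -> Dict[str, Optional[str]]:
    """Run the single-label models in order and stop at the first rejection."""
    results: Dict[str, Optional[str]] = {}
    for step in steps:
        label = step.model(features)
        results[step.name] = label
        if label is None:
            break  # conditional sequence: later steps run only if this one accepted
    return results


# Toy stand-ins for two locally built models (hypothetical thresholds)
def speaker_model(x: Features) -> Optional[str]:
    return "alice" if x[0] > 0.5 else None  # None = unknown speaker


def intent_model(x: Features) -> Optional[str]:
    return "lights_on" if x[1] > 0.5 else "lights_off"


sequence = [Step("speaker", speaker_model), Step("intent", intent_model)]
print(run_sequence(sequence, [0.9, 0.8]))  # {'speaker': 'alice', 'intent': 'lights_on'}
print(run_sequence(sequence, [0.1, 0.8]))  # {'speaker': None}
```

Stopping at the first rejection means the intent model never runs for an unknown speaker, which saves computation and makes each decision traceable to a single step.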

We provide a large open library of pre-processing algorithms, including classical audio, image and inertial DSP transforms (MFCC, voice pitch, spectra, image reshaping, …) and upper layers of existing open-source neural networks used as feature extractors (ResNet-like, voice X-vectors, …). The library also contains a set of data-augmentation functions.
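As an illustration of the kind of transforms such a library contains, the Python sketch below implements two textbook audio steps (pre-emphasis and per-frame log energy, both common ahead of MFCC extraction) and one simple augmentation (random gain followed by a circular time shift). The function names, default values and gain range are assumptions, not DavinSy's library functions.

```python
import math
import random
from typing import List, Sequence


def pre_emphasis(signal: Sequence[float], alpha: float = 0.97) -> List[float]:
    """First-order high-pass y[n] = x[n] - alpha * x[n-1], a classical step before MFCC."""
    if not signal:
        return []
    return [signal[0]] + [signal[n] - alpha * signal[n - 1] for n in range(1, len(signal))]


def frame_log_energy(signal: Sequence[float], frame_len: int, hop: int) -> List[float]:
    """Slice the signal into overlapping frames and return the log energy of each frame."""
    energies = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        frame = signal[start:start + frame_len]
        energy = sum(v * v for v in frame)
        energies.append(math.log(energy + 1e-10))  # epsilon avoids log(0) on silent frames
    return energies


def augment(signal: Sequence[float], rng: random.Random) -> List[float]:
    """Hypothetical augmentation: random gain in [0.8, 1.2], then a random circular shift."""
    if not signal:
        return []
    gain = rng.uniform(0.8, 1.2)
    shift = rng.randrange(len(signal))
    scaled = [gain * v for v in signal]
    return scaled[shift:] + scaled[:shift]


# Usage on a tiny synthetic signal
samples = [math.sin(0.3 * n) for n in range(32)]
features = frame_log_energy(pre_emphasis(samples), frame_len=8, hop=4)
print(len(features))  # 7 frames, starting at 0, 4, ..., 24
extra_sample = augment(samples, random.Random(42))  # seeded for reproducibility
```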

The Post-Processing library is a set of application rules whose purpose is to turn several inference results into a final decision.
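One common post-processing rule is temporal majority voting, which suppresses isolated misclassifications by confirming a label only when it recurs over recent inferences. The `MajorityVote` class below is a hypothetical Python sketch of such a rule, not part of the DavinSy Post-Processing library.

```python
from collections import Counter, deque
from typing import Deque, Optional


class MajorityVote:
    """Hypothetical rule: confirm a label only if it wins `min_votes` of the last `window` results."""

    def __init__(self, window: int, min_votes: int) -> None:
        if not 1 <= min_votes <= window:
            raise ValueError("min_votes must be between 1 and window")
        # deque(maxlen=...) drops the oldest result automatically
        self.history: Deque[Optional[str]] = deque(maxlen=window)
        self.min_votes = min_votes

    def update(self, label: Optional[str]) -> Optional[str]:
        """Add one inference result (None = rejected) and return the decision, if any."""
        self.history.append(label)
        counts = Counter(lbl for lbl in self.history if lbl is not None)
        if not counts:
            return None
        best, votes = counts.most_common(1)[0]
        return best if votes >= self.min_votes else None


rule = MajorityVote(window=3, min_votes=2)
decisions = [rule.update(r) for r in ["yes", "no", "yes", None]]
print(decisions)  # [None, None, 'yes', None]
```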

System Management Functions

DavinSy handles remote device management (OTA) for monitoring and modular firmware updates. It supports communication between several DavinSy instances (on different CPUs or on different devices on the same network). It includes the Database Management Module (DBM), which handles storage of the dataset, Virtual Models and networks; checkpoint creation; import and export of data for collaboration and roll-back; monitoring; a cleaning policy based on data freshness and quality; DALE indicators; and memory capacities.
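The text does not specify how the DBM cleaning policy weighs freshness against quality. As a minimal Python sketch, assuming each stored sample carries a capture timestamp and a quality score, a policy could drop stale or low-quality samples and then cap the dataset size, keeping the freshest. `Record`, `clean_dataset` and the thresholds used below are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Record:
    """Hypothetical stored sample."""
    record_id: int
    timestamp: float  # capture time in seconds
    quality: float    # assumed quality score in [0, 1]


def clean_dataset(records: List[Record], now: float, max_age_s: float,
                  min_quality: float, capacity: int) -> List[Record]:
    """Drop stale or low-quality records, then keep at most `capacity`, freshest first."""
    kept = [r for r in records
            if now - r.timestamp <= max_age_s and r.quality >= min_quality]
    # Sort by recency; quality breaks ties between equally fresh records
    kept.sort(key=lambda r: (r.timestamp, r.quality), reverse=True)
    return kept[:capacity]


dataset = [
    Record(1, timestamp=100.0, quality=0.9),
    Record(2, timestamp=10.0, quality=0.9),   # too old
    Record(3, timestamp=95.0, quality=0.2),   # too low quality
    Record(4, timestamp=99.0, quality=0.8),
]
kept = clean_dataset(dataset, now=100.0, max_age_s=20.0, min_quality=0.5, capacity=1)
print([r.record_id for r in kept])  # [1]
```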

DavinSy initial implementation characteristics

| Characteristic | Value |
| --- | --- |
| Memory footprint | As low as 8 kB of RAM and 32 kB of flash, depending on the use case |
| Performance | Training < 2 s, inference < 100 ms for a typical voice use case |
| OS | Windows, Linux, Android, RTOS |
| API language | C, Python, Java |
| Data source | Any: voice, sound, inertial, 1D sensors, image, video, … |
| Multi-application | Runs several applications simultaneously when memory is available |
| Application management | With DavinSy Maestro, you can remotely download or erase applications on your product |

Zero Dataset

You can start right now: there is no need to spend months collecting data or buying gigabytes of datasets. DavinSy uses the raw data it collects in the field, which ensures the total privacy and confidentiality of your application.

Zero Code

You describe your application through the DavinSy Maestro graphical interface. The resulting Application Sequence can then be used directly on any DavinSy-enabled device.

Really small

DavinSy runs on the smallest systems. For example, you can run a Voice Activity Detection + Speaker ID Application Sequence on an MCU with 128 kB of RAM and 512 kB of flash.

Amazingly fast

On an 80 MHz MCU, training takes only a few seconds (depending on the size of the dataset).

This makes continuous learning possible, enabling your product to adapt to any condition.

Inference, for its part, takes under 200 ms.

Portable

Once your Application Sequence is defined, you can upload it to any device running DavinSy.

It then adapts automatically to the available resources. The Middleware Adaptation Layer maps these resources.

Secure

DavinSy is secure by design because it minimizes the exchanges with the cloud.

All communications (device to device or device to cloud) are encrypted end to end.

DavinSy uses the Memory Protection Units (MPUs) available on recent MCUs to protect your data.

Multimodal

Thanks to the Application Sequence, you can handle several different data sources (sound, image, 1D sensor) simultaneously.

For example, mix voice and images to better recognize your user.

Collaborative AI

DavinSy can natively distribute the Virtual Models on the local network. Devices will discover each other and start to collaborate.

Small devices can delegate heavy tasks to bigger ones. Several devices can collate their results to reach a collaborative decision.
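The page does not say how devices combine their results; one common option is late fusion by score averaging. The Python sketch below assumes each device reports per-label scores in [0, 1] for the same input; `fuse_scores`, the report format and the threshold are hypothetical.

```python
from typing import Dict, List, Optional


def fuse_scores(device_scores: List[Dict[str, float]], threshold: float) -> Optional[str]:
    """Hypothetical late fusion: average each label's score over devices, accept the best."""
    totals: Dict[str, float] = {}
    for scores in device_scores:
        for label, score in scores.items():
            totals[label] = totals.get(label, 0.0) + score  # a missing label counts as 0
    if not totals:
        return None  # no device reported anything
    averages = {label: total / len(device_scores) for label, total in totals.items()}
    best = max(averages, key=lambda label: averages[label])
    return best if averages[best] >= threshold else None


# Two devices scoring the same utterance
reports = [{"alice": 0.9, "bob": 0.1}, {"alice": 0.6, "bob": 0.4}]
print(fuse_scores(reports, threshold=0.7))  # alice (average 0.75)
```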

Remote management

DavinSy’s OTA (Over-The-Air) capabilities enable remote downloading of Application Sequences. You can enrich your products in the field with new features; for example, you can add intrusion detection to your voice assistant.

Low Power

DavinSy is event-driven, so it does not consume power when there is no activity.