Davinsy, our autonomous machine learning system, integrates at its core Deeplomath, a deep learning kernel that learns continuously from real-time incoming data. This paper describes the main characteristics of Deeplomath. In particular, we emphasize the impact of these characteristics on:

- Computational and memory efficiencies

- Avoiding model drift through event-based continuous few-shot learning

- Ease of deployment and maintenance (MLOps)

Predefined network architecture and backpropagation are limitations

Let us start by recalling the main characteristics of the state of the art in deep convolutional and recursive neural networks:

- The need to define the architecture of the network a priori (number and types of layers, neuronal density, etc.) before the parameters are identified. This identification solves an optimization problem by back-propagation, minimizing the learning error between the model and a database while controlling the validation error.

- Beyond the architecture, the need to calibrate the hyper-parameters driving, in particular, the optimization phase.

- The need for a large database, not only to obtain a network that performs well in inference, but also to be able, thanks to the richness of the data, to recognize unknown situations effectively. Otherwise, the model always provides an answer corresponding more or less to the closest scenario in the learning dataset. This is not necessarily suitable, as the closest scenario can still be quite far from the actual situation and therefore inadequate.

The new way of Deep AI life: adaptive real-time learning

Deeplomath approaches the problem in a different way by removing the previous limitations that weigh on the deployment and use of deep networks in industrial applications:

- Encapsulation of all the functionalities of the network layers within a single type of functional layer: the user no longer has to define the types of layers to be chained.

- Automatic identification of the depth of the network and the neural density by an original heuristic allowing joint identification of the parameters of the functions constituting the layers of our “function network”, thus avoiding the need for the solution of an optimization problem by back-propagation.

- Identification of the geometry of the contour of the “known set”, allowing the rejection of ‘unknown’ scenarios without any a priori knowledge other than the information gathered from events.

- Real-time reconstruction of the network, thanks to the low computational complexity of its heuristic, allowing adaptation to changes in the environment without the need for uploading to the cloud for learning.

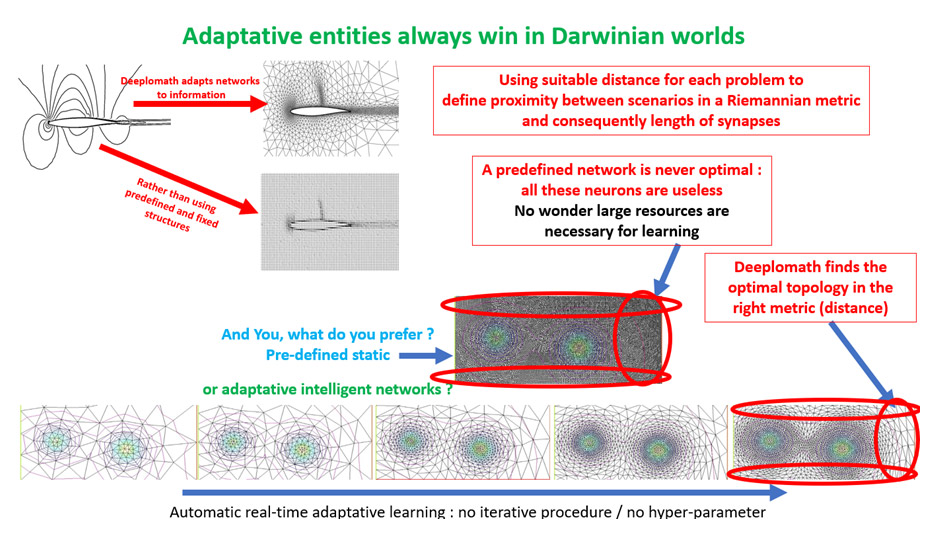

The figure illustrates the Deeplomath strategy by analogy with the adaptation of unstructured spectral finite element meshes by metric control.
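The two ideas of rejecting 'unknown' scenarios and updating the model as events arrive can be pictured with a deliberately simple sketch. It is not Deeplomath's heuristic, which is not detailed here. It is a generic nearest-prototype memory, and the class name `PrototypeMemory`, the `radius` parameter and the example labels are all hypothetical choices made for illustration.

```python
import math


class PrototypeMemory:
    """Toy few-shot classifier with rejection.

    Generic stand-in for illustration only, NOT Deeplomath's heuristic.
    """

    def __init__(self, radius):
        # Hypothetical parameter: largest distance still treated as "known".
        self.radius = radius
        # Stored events as (feature_vector, label) pairs; starts empty.
        self.prototypes = []

    def learn(self, features, label):
        """Event-driven update: store the labelled event, no training loop."""
        self.prototypes.append((tuple(features), label))

    def predict(self, features):
        """Return the nearest prototype's label, or None if nothing is close."""
        best_label, best_dist = None, math.inf
        for proto, label in self.prototypes:
            d = math.dist(proto, features)
            if d < best_dist:
                best_label, best_dist = label, d
        return best_label if best_dist <= self.radius else None


memory = PrototypeMemory(radius=1.0)
before = memory.predict((0.0, 0.0))    # nothing learned yet: rejected

memory.learn((0.0, 0.0), "idle")
memory.learn((5.0, 5.0), "running")
near = memory.predict((0.2, -0.1))     # close to a stored event
far = memory.predict((2.5, 2.5))       # far from every stored event: rejected
print(before, near, far)
```

A classifier without the radius test would label the last input with whichever stored event happens to be closest, which is the behaviour criticized earlier for networks trained on a fixed database.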

What about latency?

The latency induced by uploads to the cloud, the non-automaticity of learning procedures and the cost of calculation are serious obstacles to the deployment of deep AI. From an AI perspective, Deeplomath is a zero-dataset AI (i.e. it starts learning as information continuously arrives) that integrates a model generator, updated whenever new information arrives. This makes it possible to adapt over time to changes in the environment, noise for example, and thus to ensure performance resilience and avoid model drift.

Do you know linear systems? Gauss vs. Gradient Methods

Let us give an analogy to understand how Deeplomath’s heuristic compares with ‘back-propagation’ optimization methods:

Consider solving linear systems (https://en.wikipedia.org/wiki/System_of_linear_equations). Two classes of methods exist: direct methods and iterative methods. The former are very precise and largely insensitive to numerical problems, such as the conditioning of the matrix, but become difficult to apply as the size of the system increases. The latter are not limited by size, but they are delicate to implement, do not always converge, and the number of iterations they need is not known a priori. Moreover, they are very sensitive to small numerical disturbances when the condition number is large, and they are precisely the methods built on iterative gradient-based minimization algorithms. Deeplomath belongs to the first class: it is a direct, deterministic method that operates as long as the size of the system remains moderate. This size limitation is not a real constraint, however, when the goal is personalized, event-driven embedded learning from scarce data. In that setting, keeping the model small is a requirement, not an option.
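The contrast between the two classes can be made concrete on a tiny example. The sketch below only illustrates the analogy and says nothing about Deeplomath's internals: `gauss_solve` is a textbook Gaussian elimination with partial pivoting, `gradient_solve` is steepest descent with exact line search for a symmetric positive definite matrix, and the two 2x2 matrices, the tolerance and the iteration cap are assumptions chosen for illustration.

```python
def gauss_solve(A, b):
    """Direct method: Gaussian elimination with partial pivoting."""
    n = len(b)
    # Work on an augmented copy so the inputs are left untouched.
    M = [[float(v) for v in row] + [float(rhs)] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if M[pivot][col] == 0.0:
            raise ValueError("singular matrix")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


def gradient_solve(A, b, tol=1e-8, max_iter=100_000):
    """Iterative method: steepest descent on 0.5*x'Ax - b'x (A must be SPD).

    Returns the approximate solution and the number of iterations used.
    """
    n = len(b)
    x = [0.0] * n
    for k in range(max_iter):
        r = [b[i] - sum(A[i][j] * x[j] for j in range(n)) for i in range(n)]
        rr = sum(v * v for v in r)
        if rr ** 0.5 < tol:
            return x, k
        Ar = [sum(A[i][j] * r[j] for j in range(n)) for i in range(n)]
        alpha = rr / sum(r[i] * Ar[i] for i in range(n))  # exact line search
        x = [x[i] + alpha * r[i] for i in range(n)]
    return x, max_iter


b = [1.0, 1.0]
well = [[2.0, 0.0], [0.0, 1.0]]      # condition number 2
ill = [[1000.0, 0.0], [0.0, 1.0]]    # condition number 1000

x_direct = gauss_solve(ill, b)
x_well, iters_well = gradient_solve(well, b)
x_ill, iters_ill = gradient_solve(ill, b)
print("direct solution:", x_direct)
print("gradient iterations:", iters_well, "(well) vs", iters_ill, "(ill)")
```

The elimination performs the same fixed sequence of operations for both matrices, while the number of gradient iterations grows sharply with the condition number and cannot be read off in advance.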

Mainstream Deep Learning means tremendous computing power

It is well known that deep learning on big datasets requires powerful servers using GPUs[1]. This is because neural networks keep growing, with more and more layers and parameters requiring increasingly large training databases. Embedded learning (on a microcontroller) is never considered, and even inference on a microcontroller is problematic. In fact, there are indications that Deep Learning is approaching its computational limits and the limits of what is economically and societally acceptable (https://venturebeat.com/2020/07/15/mit-researchers-warn-that-deep-learning-is-approaching-computational-limits/). This leads to diminishing returns from machine learning, which already faces major problems in being deployed industrially (https://www.predictiveanalyticsworld.com/machinelearningtimes/deep-learnings-diminishing-returns/12298/). In other words, the cost of improving the models is becoming unsustainable (https://spectrum.ieee.org/deep-learning-computational-cost).

Davinsy’s Deep AI way of life is “low-carbon” by design

These constraints do not exist with Deeplomath, whose event-driven embedded continuous few-shot learning produces adapted networks. The cost of one full Deeplomath learning is on average 5 orders of magnitude smaller than that of state-of-the-art neural network learning. In fact, one full learning costs less than one iteration of backpropagation. In addition, as no data is transferred and everything is processed where it is acquired, the corresponding footprint simply vanishes. Most of the Davinsy workflow is seamless for the hardware.

Impact on MLOps and AI deployment

Because Deeplomath models continuously adapt to their environments, and hence avoid drift, MLOps[2] becomes straightforward. Indeed, deploying ML models and maintaining their performance in operation is probably the biggest challenge for the introduction of AI in industrial applications (https://www.phdata.io/blog/what-is-the-cost-to-deploy-and-maintain-a-machine-learning-model/).

[1] A single V100 GPU can consume between 250 and 300 watts. If we assume 250 watts, then 512 V100 GPUs consume 128,000 watts, or 128 kilowatts (kW). Running for nine days means that MegatronLM’s training cost 27,648 kilowatt hours (kWh).
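The arithmetic in this footnote can be reproduced in a few lines. The variable names are illustrative, and the 250 W figure is the lower bound assumed in the footnote rather than a measured value.

```python
gpu_power_w = 250     # assumed per-GPU draw (lower bound quoted in the footnote)
gpu_count = 512
training_days = 9

total_power_kw = gpu_power_w * gpu_count / 1000    # watts to kilowatts
energy_kwh = total_power_kw * training_days * 24   # kilowatts times hours

print(f"{total_power_kw:.0f} kW, {energy_kwh:,.0f} kWh")
```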

[2] MLOps is the process of developing a machine learning model and deploying it as a production system. Similar to DevOps, good MLOps practices increase automation and improve the quality of production models, while also focusing on governance and regulatory requirements. MLOps applies to the entire ML lifecycle, from data movement, model development, and CI/CD systems to system health, diagnostics, governance, and business metrics.