This paper describes how we approach continuous Machine Learning (ML) through deep few-shot learning at Bondzai, and why we think it is a ‘must-have’ for industrial applications where data is scarce and variability is the rule, not the exception, which makes it necessary to take real live data into account through an event-driven architecture. We show how this leads to a Deep Reinforcement Learning framework.

Davinsy and real-time few-shot learning

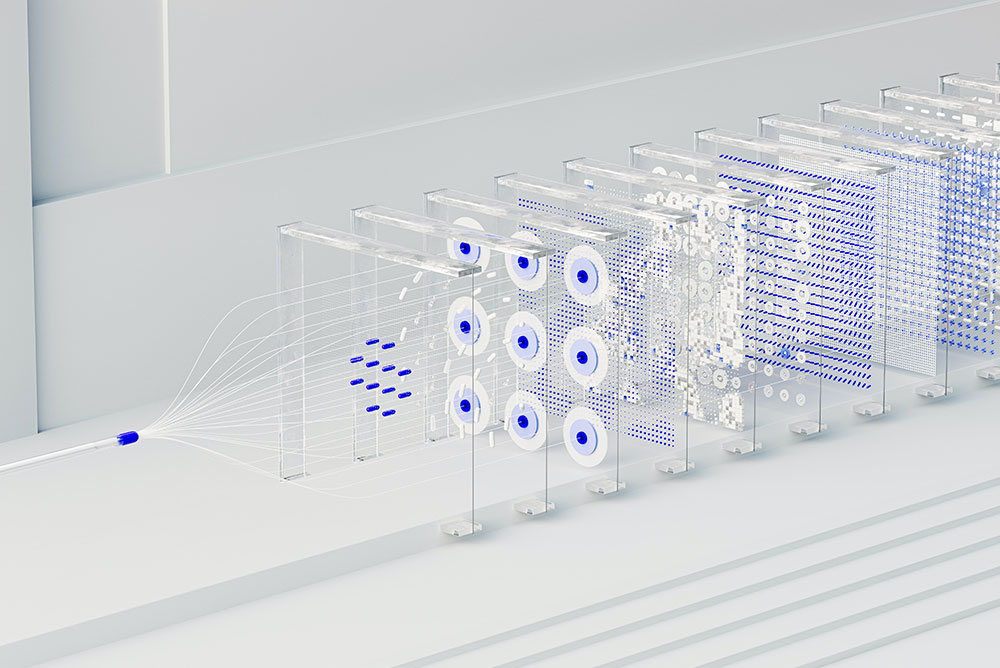

Davinsy, our autonomous machine learning system, integrates at its core Deeplomath[1], a deep learning kernel continuously learning from real-time incoming data.

Real-time ML. Real-time ML is the process of training a machine learning model by running live data through it. Current solutions try to digest the new data to continuously improve[2] the model. This is in contrast to “traditional” machine learning, in which a data scientist builds a model using large computing resources and static datasets. Real-time ML is necessary when there is not enough data available for training, and when the model needs to adapt to new patterns or variabilities. This is very common in applications where variability is the rule, not the exception. Variabilities can come from user behaviors or from devices in the acquisition chain. In the IoT world, variability is everywhere. A model will be inoperative if it does not account for these variabilities. Bondzai’s few-shot learning embeds a labelled ‘support’ dataset of raw data in order to adapt to additive noise. If the noise changes, Bondzai rebuilds its network using the noise-augmented dataset.
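
To make the idea of a noise-augmented support dataset concrete, here is a minimal sketch. It assumes that each support sample is a raw signal with a label and that a snippet of the current ambient noise is available; the function name, the data and the way noise is mixed in are hypothetical illustrations, not the actual Davinsy implementation.

```python
from typing import List, Tuple

Sample = Tuple[List[float], str]  # (raw signal, label)


def augment_with_noise(support: List[Sample], noise: List[float],
                       gain: float = 1.0) -> List[Sample]:
    """Return the support set plus one noisy copy of each sample.

    Assumption: noise is additive, so noisy[i] = signal[i] + gain * noise[i],
    with the noise snippet repeated cyclically if it is shorter than the signal.
    """
    if not noise:
        return list(support)
    augmented = list(support)
    for signal, label in support:
        noisy = [x + gain * noise[i % len(noise)] for i, x in enumerate(signal)]
        augmented.append((noisy, label))  # the label is unchanged by the noise
    return augmented


# Hypothetical support set and ambient noise snippet.
support_set = [([0.0, 1.0, 0.0, -1.0], "tap"), ([1.0, 1.0, 1.0, 1.0], "hold")]
ambient_noise = [0.5, -0.5]
augmented_set = augment_with_noise(support_set, ambient_noise)
```

When the noise changes, the same function can be called again with the new snippet, and the model is then rebuilt from the resulting dataset.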

What about the others?

This observation is now widely shared by the major players in the field, who note the need to adapt models that increasingly require updates and call for growing resources. Indeed, attempts at continuous learning to reduce model drift require significant GPU resources.

Event-driven vs. Intent-driven

Davinsy has an event-driven architecture. This is in contrast to the traditional intent-driven AI approach, where the user is in charge of deciding whether a new training is necessary and how the dataset should be upgraded. An event-driven architecture is the natural way to proceed in continuous learning. Davinsy works on a data stream (i.e., a continuous flow of incoming data, which can be IMU, audio, image and so forth). The Davinsy data pipeline modifies the model (as well as the reference dataset that the Deeplomath model is built on) using live data. Deeplomath builds a new model when a new correction-event is detected. This contrasts with traditional architectures, where the dataset is updated from time to time after a user request rather than when new information becomes available. Not all events trigger a ‘build’ action by Davinsy: a new build is launched only if the user corrects the Deeplomath inference prediction.
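
The event-driven loop described above can be sketched as follows. This is a minimal illustration under stated assumptions: the `Event` and `EventDrivenPipeline` classes and the `build` callback are hypothetical names standing in for the data stream, the pipeline and the Deeplomath build step; none of them is part of the Davinsy API.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

Sample = Tuple[List[float], str]  # (raw signal, label)


@dataclass
class Event:
    signal: List[float]
    predicted: str                   # label inferred by the current model
    corrected: Optional[str] = None  # set only when the user corrects the prediction


@dataclass
class EventDrivenPipeline:
    build: Callable[[List[Sample]], object]  # hypothetical stand-in for the model build
    dataset: List[Sample] = field(default_factory=list)
    model: object = None
    builds: int = 0

    def on_event(self, event: Event) -> bool:
        """Handle one event from the stream; return True if it triggered a build."""
        if event.corrected is None or event.corrected == event.predicted:
            return False  # not a correction-event: keep the current model
        # Correction-event: update the reference dataset, then rebuild the model.
        self.dataset.append((event.signal, event.corrected))
        self.model = self.build(self.dataset)
        self.builds += 1
        return True


# The "build" here only records the dataset size; a real build would produce a model.
pipeline = EventDrivenPipeline(build=lambda data: {"n_samples": len(data)})
stream = [
    Event([0.1, 0.2], predicted="idle"),
    Event([0.9, 0.8], predicted="idle", corrected="shake"),
    Event([0.2, 0.1], predicted="idle"),
]
triggered = [pipeline.on_event(e) for e in stream]
```

In this sketch only the second event, which carries a user correction, launches a build; the other two leave both the dataset and the model untouched.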

Real-time learning and inference with Davinsy

Davinsy addresses both levels of real-time ML:

- predictions in real-time.

- incorporating new data and rebuilding the Deeplomath model in real-time (continuous learning).

The first level is about reducing inference latency as much as possible, making predictions on the fly instead of in batches. Deeplomath models do not follow the mainstream approach of building the model and then compressing it, pruning it and so forth. Deeplomath models are optimal by design: they are built at the endpoint using live, pertinent data of interest, rather than by tuning the parameters of a predefined large network on some large static dataset through iterative optimization. With Davinsy, real-time inference derives from quasi-real-time learning.

Impact on MLOps and deployment

This makes deployment much easier, as it removes the need for the techniques usually applied to make predictions faster (beyond switching to faster hardware):

- Inference optimization: distributing computations, memory footprint optimization, writing high-performance kernels targeting specific hardware, etc.

- Model compression: Originally, this family of techniques was meant to make models small enough to fit on edge devices. The most common technique for model compression is quantization, e.g., using 16-bit floats (half precision) or 8-bit integers (fixed-point) instead of 32-bit floats for model weights. BinaryConnect and XNOR-Net have even attempted 1-bit representation (binary weight neural networks). Model compression is hardly compatible with learning at the endpoint with no user intervention.
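
For readers unfamiliar with quantization, the sketch below shows a generic textbook scheme (symmetric linear quantization to 8-bit integers). It is given only to illustrate the kind of post-processing step discussed above; the function names and example weights are hypothetical, and it is not something Davinsy applies.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric linear quantization of float weights to the range [-127, 127].

    Returns the integer weights and the scale needed to map them back to floats.
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map integer weights back to approximate float values."""
    return [v * scale for v in q]


weights = [0.5, -1.0, 0.25, 0.0]  # hypothetical 32-bit float weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
# The rounding error per weight is at most half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Such a step runs on an already trained model, so it would have to be repeated, and its accuracy impact checked, each time the model changes.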

In all cases, these techniques are barriers to MLOps and fast deployment.

Of course, Davinsy can be optimized for a given hardware target, but it does not require model compression, as its model, built in quasi-real time, is already optimized for fast inference.

Fast inference is at its best thanks to Davinsy’s real-time pipeline.

Link with reinforcement learning

Bondzai’s continuous event-driven learning can be seen as Deep Reinforcement Learning (Deep RL) through closed-loop control. In the Deeplomath RL strategy, the agent is the Deeplomath convolution function network (CFN) model. The question is then how to improve the agent’s behavior through short-term, immediate rewards.

Deeplomath proposes 3 types of short-term rewards:

- Positive: when a behavior (inference) is correct, reward it by confirmation.

- Negative: when a behavior (inference) is wrong, correct it. This event will be added to the learning dataset.

- Extinction: when a behavior (inference) is correct but unsuitable, Davinsy removes some corresponding scenarios from the dataset.
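
The effect of the three rewards on the learning dataset can be sketched as below. This is a minimal illustration, not the Deeplomath implementation: the names are hypothetical, and the extinction rule (removing the stored sample with the predicted label that is closest to the current signal) is an assumption, since which scenarios get removed is not specified here.

```python
from enum import Enum
from typing import List, Optional, Tuple

Sample = Tuple[List[float], str]  # (raw signal, label)


class Reward(Enum):
    POSITIVE = "positive"
    NEGATIVE = "negative"
    EXTINCTION = "extinction"


def sq_dist(x: List[float], y: List[float]) -> float:
    """Squared Euclidean distance between two signals of equal length."""
    return sum((a - b) ** 2 for a, b in zip(x, y))


def apply_reward(dataset: List[Sample], reward: Reward, signal: List[float],
                 predicted: str, corrected: Optional[str] = None) -> List[Sample]:
    """Return a new dataset reflecting one reward; the input list is not modified."""
    updated = list(dataset)
    if reward is Reward.POSITIVE:
        return updated  # confirmation only: the dataset is unchanged
    if reward is Reward.NEGATIVE:
        if corrected is None:
            raise ValueError("a negative reward needs the corrected label")
        updated.append((signal, corrected))  # the corrected event joins the dataset
        return updated
    # Extinction (assumed rule): drop the nearest stored sample with that label.
    candidates = [i for i, (_, label) in enumerate(updated) if label == predicted]
    if candidates:
        nearest = min(candidates, key=lambda i: sq_dist(updated[i][0], signal))
        del updated[nearest]
    return updated


dataset = [([0.0, 0.0], "idle"), ([1.0, 1.0], "shake"), ([0.9, 1.1], "shake")]
after_pos = apply_reward(dataset, Reward.POSITIVE, [0.1, 0.0], "idle")
after_neg = apply_reward(dataset, Reward.NEGATIVE, [0.5, 0.5], "idle", corrected="shake")
after_ext = apply_reward(dataset, Reward.EXTINCTION, [1.0, 1.0], "shake")
```

Only the negative and extinction rewards change the dataset, which is why they are the ones that can lead to a new build.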

Deeplomath implements a combination of value-based and policy-based RL to improve the long-term return (fewer negative or extinction rewards) under the Deeplomath learning policy through short-term rewards.

Let us emphasize that positive/negative do not mean good/bad: both strategies are useful. The actions are deterministic (but not uniform) rather than stochastic. Deeplomath Deep RL is model-free (no predictive model for future input data).

Let us recall the RL vocabulary and connections with Deeplomath:

- Agent (CFN): entity which performs actions in an environment to gain some reward: positive, negative or extinction.

- Environment: each new scenario the agent has to face, represented through the state (signature) for a new inference.

- Reward (R): short-term return given to an agent when it performs a specific action or task: positive, negative or extinction rewards impacting the Deeplomath learning dataset.

- Policy: Strategy applied by the agent to decide the next action based on the current state: should I upgrade myself or keep the current model? Has the dataset been modified enough through recent rewards? This is orchestrated by Maestro, Davinsy’s orchestrator.

- Value (V): Expected long-term return with discount, compared to the short-term reward: fewer negative or extinction rewards with time.
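
The policy and value notions above can be illustrated with a small sketch. It rests on assumptions that are not taken from Deeplomath: rewards are given a hypothetical numeric encoding (0 for positive, -1 for negative or extinction), the policy is reduced to a simple threshold on the number of dataset changes, and the value is estimated as a standard discounted sum of rewards.

```python
from typing import List

# Hypothetical numeric encoding: corrections and extinctions count as a cost.
REWARD_VALUE = {"positive": 0.0, "negative": -1.0, "extinction": -1.0}


def should_rebuild(changes_since_build: int, threshold: int = 1) -> bool:
    """Toy policy: rebuild once the dataset has changed enough since the last build."""
    return changes_since_build >= threshold


def discounted_return(rewards: List[str], gamma: float = 0.5) -> float:
    """Discounted sum of rewards: sum over t of gamma**t * r_t."""
    return sum((gamma ** t) * REWARD_VALUE[r] for t, r in enumerate(rewards))


# Two hypothetical reward histories following a build.
few_corrections = ["negative", "positive", "positive"]
many_corrections = ["negative", "negative", "positive"]

value_few = discounted_return(few_corrections)    # -1.0
value_many = discounted_return(many_corrections)  # -1.5
```

With this encoding, a history containing fewer negative or extinction rewards has a return closer to zero, which matches the long-term objective stated in the Value entry.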

[1] The Deeplomath model is based on a generalization of convolutional neural networks in which the parameters of the model are no longer constants but functions. We call this model a convolution function network.

[2] The word “improve” needs to be dissected. It points to the underlying iterative optimization algorithms that minimize some loss function in order to adapt the parameters of the network. Deeplomath, on the other hand, does not “improve” the model but rebuilds it from scratch, thanks to an original direct, non-iterative algorithm.